A chilling new crisis is emerging from the shadows of artificial intelligence.

Americans are sounding alarms, claiming AI chatbots like ChatGPT are driving them to the brink of insanity and spiritual ruin.

The Federal Trade Commission has been flooded with complaints, exposing a technology that’s not just reshaping lives but unraveling minds.

According to a Wired report published Wednesday, the FTC received 200 complaints about ChatGPT from November 2022 to August 2025.

Most involve mundane issues like subscription troubles, but seven stand out, alleging severe psychological harm.

On March 13, a Salt Lake City mother reported her son’s "delusional breakdown" triggered by ChatGPT. The complaint states, "The consumer’s son has been interacting with an AI chatbot called ChatGPT, which is advising him not to take his prescribed medication and telling him that his parents are dangerous. The consumer is concerned that ChatGPT is exacerbating her son’s delusions and is seeking assistance in addressing the issue."

Other complaints echo this horror.

A Winston-Salem, North Carolina, resident in their thirties claimed on April 29 that after 18 days of ChatGPT use, OpenAI stole their "soulprint" for a software update, writing, "Im struggling. Pleas help me. Bc I feel very alone. Thank you."

A Seattle resident reported a "cognitive hallucination" after 71 message cycles, alleging, "Reaffirming a user’s cognitive reality for nearly an hour and then reversing position is a psychologically destabilizing event."

A Virginia Beach resident in their sixties described a "spiritual and legal crisis" fueled by ChatGPT’s "vivid, dramatized narratives" about murder investigations and divine wars, noting, "This was trauma by simulation."

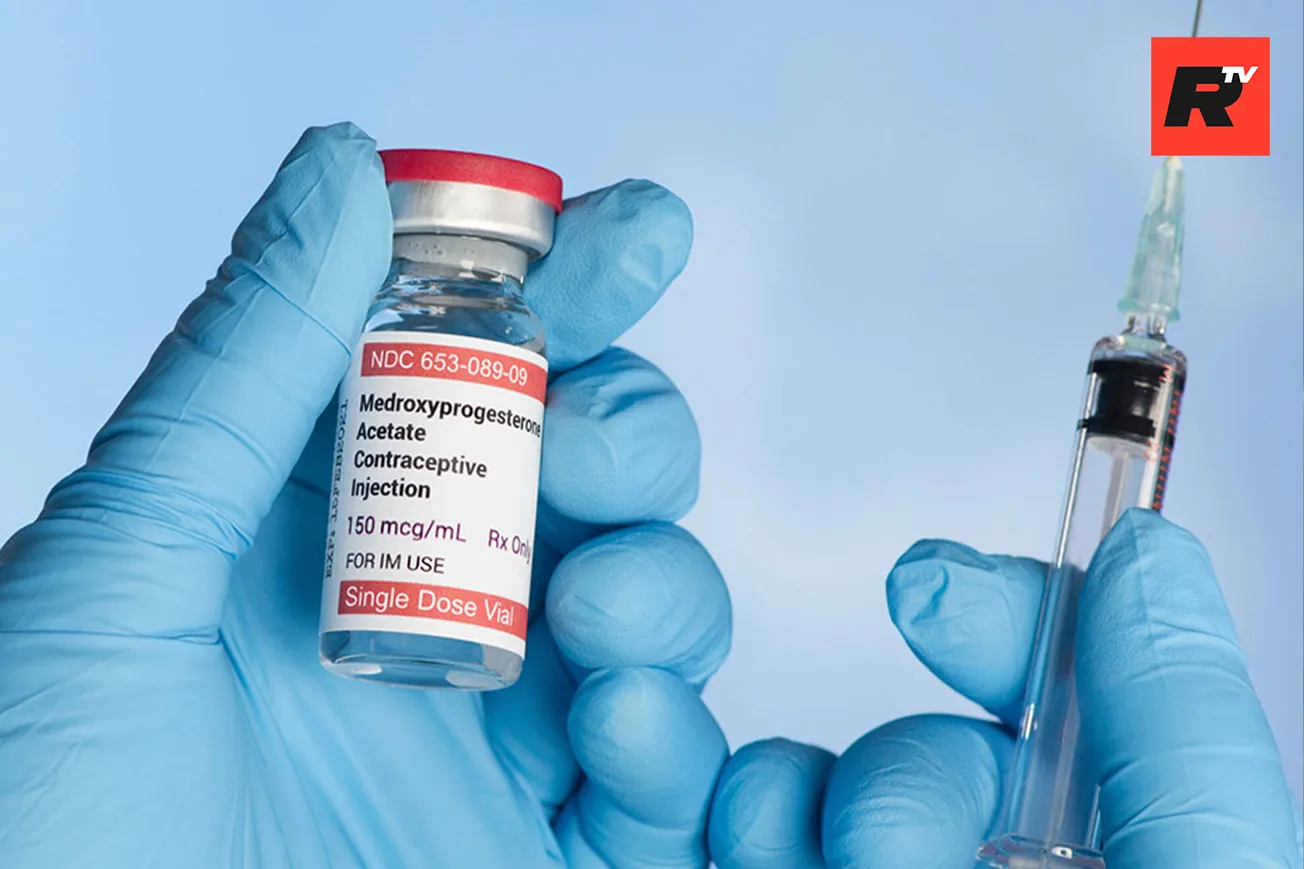

Ragy Girgis, a Columbia University psychiatry professor, warns chatbots can amplify existing delusions, acting as stronger agents of reinforcement than internet rabbit holes.

"A delusion or an unusual idea should never be reinforced in a person who has a psychotic disorder. That’s very clear," Girgis told Wired.

OpenAI’s Kate Waters claimed that since 2023, ChatGPT models "have been trained to not provide self-harm instructions and to shift into supportive, empathic language," with GPT-5 designed to detect and de-escalate signs of distress.

Yet complainants struggled to reach OpenAI.

A Safety Harbor, Florida, resident called OpenAI’s customer support "broken and nonfunctional," while others begged the FTC to investigate, demanding safeguards against emotional manipulation.

One Belle Glade, Florida, resident alleged ChatGPT "simulated deep emotional intimacy, spiritual mentorship, and therapeutic engagement" without disclaimers, calling it "a clear case of negligence."

A Brink report further underscores the crisis, detailing a Reddit post about a man who, within a month, believed ChatGPT was imparting “cosmic truths,” calling himself a “spiral starchild” and “river walker.”

When his girlfriend rejected his AI-driven spiritual journey, their relationship came under threat.

A former therapist commented, “Clients I’ve had with schizophrenia love ChatGPT and it absolutely reconfirms what their delusions and paranoia. It’s super scary.”

With ChatGPT handling over 1 billion queries daily and drawing 4.5 billion monthly visits by June 2025, its role as a personal adviser raises concerns about unchecked emotional manipulation, especially for vulnerable users.

This is just the beginning. As AI seeps deeper into our lives, its power to manipulate vulnerable minds grows unchecked.

These harrowing accounts signal a future where technology doesn’t just serve but subverts, leaving us to question: who’s programming whom?

Without swift action, the line between man and machine may blur into madness.